In 1876, Western Union turned down an opportunity to buy the patent rights to the telephone for $100,000. The company's leadership couldn't see how a device that required two people to be connected by a wire at the same time would ever compete with the telegraph. Fifteen years later, there were over 150,000 telephones in the United States. Western Union had missed one of the great business opportunities in history.

But here's the thing. If you were sitting in Western Union's offices in 1876, their decision would have made perfect sense. The telephone was unproven technology. The infrastructure didn't exist. Nobody knew what a viable business model looked like. Any attempt to value the opportunity using traditional financial analysis would have produced a number close to zero.

This is the problem that haunts every genuine innovation. The very tools we use to make rational investment decisions break down precisely when the opportunities are largest and most transformative.

The pattern keeps repeating

In the 1840s, Britain experienced railway mania. Hundreds of railway companies formed, raised capital, and began laying track. Most failed. Investors lost fortunes. But by 1850, Britain had built most of the rail network that would power its economy for the next century.

In the 1920s, America experienced a similar mania around automobiles and radio. Hundreds of car companies emerged. Nearly all disappeared. But the survivors, Ford and General Motors, became the backbone of American manufacturing. The radio networks that survived became CBS and NBC.

In the 1990s, the pattern repeated with the internet. At the peak in early 2000, the Bloomberg US Internet Index tracked 100 young internet companies worth a combined $2.9 trillion. By 2003, the index had lost 93% of its value and Bloomberg stopped calculating it. More than two-thirds of the publicly traded internet retail companies from March 2000 failed completely. But Amazon survived. So did eBay. The infrastructure they built on, both physical and conceptual, powered the next two decades of digital commerce.

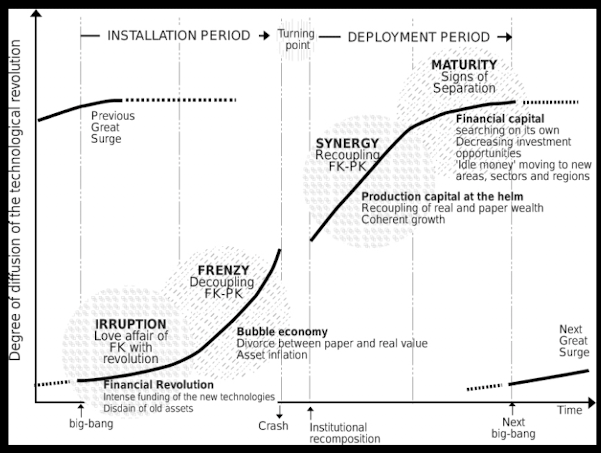

The economist Carlota Perez spent decades studying these patterns. She noticed something that most observers missed. These booms and busts weren't random. They followed a predictable structure tied to what economists call general-purpose technologies: fundamental innovations like steam power, electricity, or the internet that transform entire economies.

Perez identified two distinct phases. First comes installation, a period of financial frenzy where speculation runs wild and massive amounts of capital flow into building infrastructure. Then comes deployment, where the focus shifts from building capacity to actually using it productively. The fortunes made in each phase go to different people. Installation rewards traders and dealmakers. Deployment rewards operators who figure out how to generate profits from the infrastructure everyone else built.

Why smart people keep making the same mistake

In 2015, finance professors Bradford Cornell and Aswath Damodaran decided to investigate whether the pattern was playing out again in online advertising. They looked at every publicly traded company with significant online advertising revenue and ran a simple thought experiment. Given each company's market value, what would its revenues need to be in ten years to justify today's stock price?

The math required assumptions. They picked generous ones. A 9% cost of capital, lower than reality. Operating margins of 20%, higher than most companies would achieve given increasing competition. Even with these favorable assumptions, the numbers didn't work.

The total future revenues implied by the market capitalizations added up to $522 billion in 2025. But the entire global advertising market in 2014 was only $545 billion, with $138 billion from digital. Even assuming aggressive growth in total advertising and digital reaching 50% of the market, the online advertising market in 2025 would be around $466 billion. The public companies alone were priced for more revenue than would exist in the entire market. And this calculation didn't include smaller public companies, private companies, or secondary revenue streams from large companies like Apple.
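The thought experiment can be sketched in a few lines. This is a deliberately simplified stand-in, not Cornell and Damodaran's actual model: it assumes investors receive no cash before year ten, and that the business is then worth a growing perpetuity of its after-tax operating income. The 9% cost of capital and 20% margin come from the text; the tax rate, terminal growth rate, and the example market cap are illustrative assumptions.

```python
def implied_revenue(market_cap, years=10, cost_of_capital=0.09,
                    operating_margin=0.20, tax_rate=0.25, terminal_growth=0.03):
    """Revenue needed in `years` to justify today's market cap (simplified sketch).

    tax_rate and terminal_growth are assumed values, not taken from the study.
    """
    if terminal_growth >= cost_of_capital:
        raise ValueError("terminal_growth must be below cost_of_capital")
    # What the business must be worth in the target year if investors earn
    # exactly the cost of capital and receive no cash in the meantime.
    required_value = market_cap * (1 + cost_of_capital) ** years
    # Perpetuity value generated by each dollar of target-year revenue.
    after_tax_margin = operating_margin * (1 - tax_rate)
    value_per_revenue_dollar = (
        after_tax_margin * (1 + terminal_growth) / (cost_of_capital - terminal_growth)
    )
    return required_value / value_per_revenue_dollar


# Hypothetical company with a $100 billion market cap today.
example = implied_revenue(100.0)
print(f"Implied year-10 revenue: ${example:.1f} billion")

# Figures quoted in the text, in billions of dollars.
IMPLIED_TOTAL_2025 = 522.0     # sum of implied revenues across public companies
PROJECTED_MARKET_2025 = 466.0  # projected online advertising market in 2025
overshoot = IMPLIED_TOTAL_2025 / PROJECTED_MARKET_2025 - 1
print(f"Implied revenue exceeds the projected market by {overshoot:.0%}")  # about 12%
```

Lowering the margin or raising the cost of capital pushes the implied revenue higher, which is why assumptions that flatter the companies make the mismatch harder to explain away.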

This is what Cornell and Damodaran call the big market delusion. When a genuinely large opportunity emerges, something strange happens to collective decision-making. Individual entrepreneurs and investors, each making locally rational choices, produce a collectively irrational outcome.

The mechanism is overconfidence. Not the casual kind where someone thinks they're a better driver than average. The deep, structural kind that researchers have documented in study after study. In one survey of nearly 3,000 entrepreneurs, 81% believed their chance of success was at least 70%. One-third thought success was guaranteed. The actual failure rate for new businesses is 75% within five years.

Venture capitalists show the same bias. Zacharakis and Shepherd studied 53 VCs from Denver and Silicon Valley and found they were not only overconfident but became more overconfident with more information. Another study measured what its authors called "Bell's disappointment," the gap between anticipated and actual payoffs. Venture capitalists scored higher than other investor groups on both overconfidence and eventual disappointment.

This isn't irrationality. It's selection bias. The people who become entrepreneurs are those who believe, against the odds, that they can succeed where others fail. The people who become VCs are those who believe they can spot winners that others miss. You need these personality types to fund the experiments. A perfectly rational assessment would lead to underinvestment in genuinely transformative opportunities.

The economist Joseph Schumpeter understood this intuitively. He called the entrepreneur's willingness to plunge into uncertain ventures despite overwhelming odds "the dream and the will to found a private kingdom." Schumpeter saw that progress required people who were, by conventional standards, slightly unhinged. Their overconfidence wasn't a bug in the system. It was the feature that made the system work.

The AI wave and the infrastructure build-out

Right now, we're watching this pattern unfold again in artificial intelligence. And this time, the scale is unprecedented.

Microsoft announced in January 2025 that it would spend $80 billion on AI-enabled data centers over its current fiscal year. Not over five years or a decade. One year. Amazon, Google, and Meta are each planning similar levels of capital expenditure. Oracle announced plans for data centers requiring more than a gigawatt of power each. For context, a gigawatt is roughly the output of a large nuclear reactor.

In November 2025, Anthropic announced a $50 billion investment in U.S. AI infrastructure, starting with custom data centers in Texas and New York expected to come online in 2026. This brings the total announced infrastructure commitments from major AI players to unprecedented levels. In the second quarter of 2025 alone, organizations increased spending on AI infrastructure by 166% year-over-year, reaching $82 billion. The International Data Corporation projects that AI infrastructure spending will reach $758 billion by 2029.

OpenAI's Stargate project exemplifies the scope of this expansion. Announced in January 2025 with an initial $100 billion commitment and a goal of reaching $500 billion, the project has moved faster than planned. By September 2025, OpenAI, Oracle, and SoftBank had announced five new data center sites, bringing total planned capacity to approximately 7 gigawatts and more than $400 billion in investment. That puts the project on track to secure the full 10-gigawatt, $500 billion commitment by the end of 2025, ahead of schedule. The flagship Abilene, Texas site is already operational and running early training and inference workloads using NVIDIA GB200 racks delivered by Oracle in June 2025.

The hyperscalers are in an arms race. Each believes that AI will transform computing and that the winners will be those who build the most capacity fastest. They're betting tens of billions of dollars on this belief.

Behind them, a new category of infrastructure companies has emerged and is scaling rapidly. CoreWeave, founded in 2017 as a cryptocurrency mining operation, pivoted to providing GPU cloud services for AI workloads. The company went public in March 2025 and initially traded 200% above its IPO price. Lambda Labs, Crusoe Energy, and a dozen other "neo-clouds" are racing to build specialized infrastructure for AI training and inference. Estimates of the opportunity vary by source but point the same way. By one count, neo-cloud providers reached $5 billion in quarterly revenues in Q2 2025 and are projected to reach nearly $180 billion in revenues by 2030. Another estimate sizes the neo-cloud market at $24.07 billion in 2025 and expects it to surge to $173.61 billion by 2030, a 48.46% CAGR.
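Growth projections like these are easy to sanity-check. The sketch below recomputes the compound annual growth rate from the two market-size endpoints quoted above, assuming five compounding years between 2025 and 2030; the function name is illustrative.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


# Market-size figures quoted in the text, in billions of dollars.
rate = cagr(24.07, 173.61, years=5)
print(f"Implied CAGR: {rate:.2%}")
```

The result lands within a few hundredths of a percentage point of the quoted 48.46%, so the endpoints and the growth rate are at least internally consistent. Whether a market can actually compound at that rate for five years is a separate question.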

Then there are the utilities. American Electric Power announced plans to invest $55 billion in grid infrastructure and generation capacity through 2029, much of it driven by projected AI data center demand. Constellation Energy is restarting Three Mile Island's undamaged reactor specifically to power Microsoft data centers, having signed a 20-year power purchase agreement in September 2024, with operations expected to begin in 2027 (accelerated from the original 2028 timeline). Utility stocks, boring dividend plays for decades, became growth stories.

Add it all up and the capital flowing into AI infrastructure exceeds anything seen in previous technology waves. For comparison, at the peak of the dot-com boom in 2000, total venture capital investment across all sectors was about $100 billion. By 2025, AI infrastructure spending alone has surpassed this annual rate by multiples.

Is this rational? Nobody knows. That's the point.

The option premium view

Think about how financial options work. You pay a premium today for the right, but not the obligation, to buy or sell an asset at a specific price in the future. Most options expire worthless. But the ones that pay off can return multiples of the premium paid. A portfolio of options can have positive expected value even though most individual options fail, as long as the rare winners pay off by enough to cover the premiums lost on the rest.

Now let's apply this logic to technological innovation. When a general-purpose technology emerges, nobody knows which specific applications will create value. Will online retail work better as pure-play digital stores or hybrid models combining physical and online? Should social networks charge subscription fees, sell advertising, or find some other revenue model? Will AI make money through foundation models, specialized applications, or infrastructure services?

The only way to find out is to fund multiple approaches simultaneously and see what works. Each startup is essentially an option on a different configuration of the technology. Most will expire worthless. But the few that succeed can return extraordinary value. The collective "overpricing" that observers call a bubble is actually society paying an option premium to run these experiments in parallel.
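The arithmetic behind this argument is simple enough to sketch. The numbers below are purely hypothetical (100 startups, a 10% success rate, a 15x payoff for winners); the point is only that a portfolio can be worth funding when the payoff multiple exceeds one divided by the success probability, even if nine bets in ten go to zero.

```python
def portfolio_expected_value(n_bets, cost_per_bet, p_success, payoff_multiple):
    """Expected net gain from funding n_bets identical all-or-nothing bets."""
    total_cost = n_bets * cost_per_bet
    expected_winners = n_bets * p_success
    expected_payoff = expected_winners * payoff_multiple * cost_per_bet
    return expected_payoff - total_cost


def breakeven_multiple(p_success):
    """Payoff multiple at which the portfolio's expected net gain is zero."""
    return 1 / p_success


# Hypothetical portfolio: 100 bets of $1 million each, 90% expected to fail.
net = portfolio_expected_value(n_bets=100, cost_per_bet=1.0,
                               p_success=0.10, payoff_multiple=15)
print(f"Expected net gain: ${net:.0f} million")
print(f"Break-even payoff multiple: {breakeven_multiple(0.10):.0f}x")
```

With these assumed inputs the expected net gain is positive despite a 90% failure rate. Drop the payoff multiple below the break-even level and the same portfolio loses money on average, which is why the size of the eventual market matters so much to the argument.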

This option premium buys something that cannot be obtained any other way. You cannot determine in advance which business model will work. You cannot run a controlled experiment. You cannot analyze your way to the answer. The knowledge only emerges through real-world trial and error, and trial and error requires funding actual companies that will mostly fail.

Cornell and Damodaran make this explicit in their paper. The dot-com bubble "did change the way we live, altering not only how we shop but also how we travel, plan, and communicate with each other." The enthusiasm for big markets creates price volatility, "but it is also a spur for innovation. The benefits of that innovation, in our view, outweigh the costs of the volatility."

This is a radically different way of thinking about bubbles. The standard view treats them as market failures, temporary insanity that destroys value. The option premium view treats them as a feature of healthy capital markets, a mechanism for funding exploration that rational analysis would reject.

Here's what we know for certain about AI: the technology works. Large language models can perform tasks that were impossible three years ago. They can write code, analyze documents, generate images, and engage in reasoning that looks increasingly like human cognition.

Here's what we don't know: which business models will capture value. OpenAI charges per token for API access. Anthropic offers both API and consumer subscriptions. Google is integrating AI into existing products. Meta is giving models away for free, betting on an advertising-supported ecosystem. Microsoft is selling AI as part of enterprise software bundles.

We also don't know what the sustainable cost structure looks like. Training costs for frontier models are dropping rapidly as algorithms improve, but they're still measured in tens of millions of dollars per model. Inference costs are falling even faster, but the volume of queries is growing exponentially. Will the unit economics work at scale? What will competitive dynamics do to pricing power?

We don't know what the limiting constraints will be. Is it chip supply? Nvidia can't manufacture H100 GPUs fast enough to meet demand, and competition from AMD and other manufacturers is intensifying. AMD's CEO announced in November 2025 that the company expects 35% annual revenue growth driven by "insatiable" demand for AI chips, with its AI data center business accounting for most of that growth over the next 3-5 years. Is it power? AI data center power demand is escalating dramatically. McKinsey forecasts that U.S. data center energy demand will rise from 224 terawatt-hours in 2025 to 606 terawatt-hours in 2030, a 170 percent increase. The Department of Energy projects that data centers will consume between 6.7% and 12% of total U.S. electricity by 2028, compared with 4.4% in 2023. Deloitte estimates that by 2035, power demand from AI data centers in the United States could grow more than thirtyfold, reaching 123 gigawatts, up from 4 gigawatts in 2024. Is it cooling? High-density GPU racks generate enormous heat. Is it talent? People who can train and deploy large models remain scarce.

And we definitely don't know which layer of the stack will capture value. Will it be the foundation model providers? The infrastructure companies? The application layer? The picks-and-shovels businesses selling components? History suggests the answer is rarely obvious in advance.

What we're witnessing is the paying of an option premium at massive scale. The hyperscalers are collectively spending hundreds of billions of dollars to build capacity that may or may not be needed. They're doing this because each believes it will be among the winners and because the cost of being wrong by underinvesting exceeds the cost of being wrong by overinvesting.
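The asymmetry can be made concrete with a toy decision table. Every payoff below is a made-up illustration, not an estimate for any real company: building pays off well if demand arrives and loses moderately if it doesn't, while waiting costs nothing if demand never comes but forfeits the market if it does.

```python
def expected_payoff(p_demand, payoff_if_demand, payoff_if_no_demand):
    """Probability-weighted payoff of one strategy."""
    return p_demand * payoff_if_demand + (1 - p_demand) * payoff_if_no_demand


def breakeven_probability(build, wait):
    """Demand probability above which building beats waiting.

    `build` and `wait` are (payoff_if_demand, payoff_if_no_demand) tuples.
    """
    gain_if_demand = build[0] - wait[0]     # what building gains when demand arrives
    loss_if_no_demand = wait[1] - build[1]  # what building loses when it doesn't
    return loss_if_no_demand / (gain_if_demand + loss_if_no_demand)


# Hypothetical payoffs in arbitrary units.
BUILD = (100.0, -30.0)  # overbuild risk: lose 30 if demand never shows up
WAIT = (-80.0, 0.0)     # underbuild risk: lose 80 of market position if it does

threshold = breakeven_probability(BUILD, WAIT)
print(f"Build whenever P(demand) exceeds {threshold:.1%}")

p = 0.20
print(f"At P(demand) = {p:.0%}: build = {expected_payoff(p, *BUILD):.1f}, "
      f"wait = {expected_payoff(p, *WAIT):.1f}")
```

Under these assumed payoffs, building is the better choice even when its own expected payoff is negative, because waiting is expected to cost more. That is the logic of an arms race: the comparison that matters is not build versus zero but build versus being left behind.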

The neo-clouds are betting that specialized infrastructure for AI workloads will command a premium over general-purpose cloud computing. They're raising capital at valuations that assume this bet pays off. Most will likely fail. But the capacity they build will remain, available for whoever figures out how to use it productively.

The utilities are betting that AI data centers represent decades of sustained demand growth. They're making thirty-year infrastructure investments based on projections that could easily prove wildly optimistic. But if they don't build the capacity and the demand materializes, they'll miss the opportunity entirely. By one estimate, U.S. data center electricity demand could double to 409 terawatt-hours by 2030, with AI expected to drive most of this increase. Bain projects that by 2030, U.S. data centers could consume about nine percent of the country's total electricity, more than double today's share and roughly 150 TWh above baseline outlooks.

This is the option premium in its purest form. Nobody has enough information to make a fully rational decision. But the potential payoff for being right is so large that it justifies building redundant capacity. The "waste" in the system is actually buying something valuable: parallel exploration of the possibility space.

Knowledge emerges from overbuilding

Go back to the railway mania of the 1840s. Britain built far too much rail capacity far too quickly. Hundreds of railway companies went bankrupt. Investors lost fortunes. But by 1850, Britain had a national rail network that would power its economy for a century.

The overbuilding wasn't waste. It was discovery. Nobody knew in 1840 where rails should go, what gauge tracks should use, how stations should be designed, what pricing models would work, or how to manage a network of unprecedented complexity. The only way to figure this out was to build many railways, watch most fail, and learn from the experiments.

The companies that laid track in unprofitable routes generated knowledge about where rails shouldn't go. The companies that used incompatible gauges revealed the need for standardization. The companies that experimented with different business models revealed which approaches created value. The successful railways inherited all this knowledge without paying for it.

The same pattern played out with electricity. In the 1880s and 1890s, hundreds of local utilities formed, each building generating capacity and distribution networks. Most failed or were acquired. But by 1920, America had an electrical grid that would enable decades of productivity growth. The overbuilding in the installation phase made the deployment phase possible.

Cornell and Damodaran document this explicitly in their dot-com case study. Yes, two-thirds of internet retail companies failed. Yes, investors lost trillions of dollars of paper wealth. But the survivors built their businesses on infrastructure that the failures created. Amazon didn't have to figure out payment processing, customer service protocols, warehouse management systems, or last-mile delivery logistics from scratch. Dozens of failed companies had already run those experiments.

The cannabis case study shows the pattern compressed into a single year. By October 2018, the ten largest cannabis companies had a combined market cap exceeding $48 billion. By October 2019, they had lost more than half that value. Tilray fell dramatically: the stock reached $300 per share in September 2018, crashed to $123 within a week, and eventually declined to around $2.95 by 2020. Aurora and other major players also experienced 70% declines. But the knowledge generated during that year of overinvestment remains. The industry now knows far more about cultivation at scale, regulatory compliance, distribution channels, and consumer preferences than it did before the boom.

With AI, we're in the early stages of paying the option premium. The hundreds of billions flowing into infrastructure are funding experiments about data center design, chip architecture, power management, cooling systems, networking, and software optimization. Most of these experiments will fail or prove suboptimal. But the winners will inherit the knowledge.

We're also discovering constraints in real time. The power bottleneck is already apparent. Oracle had to adjust data center plans when the design required a gigawatt of power and the only way to get that much capacity on short notice was to co-locate with a nuclear plant. By late 2025, it's clear that energy is the critical limiting factor. Deloitte reports that grid stress is the leading challenge for data center infrastructure development, with 79% of surveyed power company and data center executives saying AI will increase power demand through 2035. Behind-the-meter (BTM) power generation, including natural gas, rooftop solar, and even nuclear plant restarts, has become the go-to source for data center operators, shifting timelines and decision-making processes.

This is valuable knowledge. It tells us that the next phase of AI infrastructure will need to solve energy constraints, not just compute constraints. The constraint isn't how many chips you can buy. It's how much electricity you can secure and how much heat you can dissipate.

The economist Nikolai Kondratieff noticed that major technological shifts follow roughly fifty-year cycles. Steam and railways in the 1830s-1880s. Steel and electricity in the 1880s-1930s. Oil and automobiles in the 1930s-1980s. Information technology and telecommunications in the 1980s-2030s. Each cycle begins with massive infrastructure investment, often financed by speculation, and matures into decades of productivity growth.

Each wave is also bounded by physical constraints. The steam age was limited by coal supply and transportation. The electrical age was limited by generation capacity and distribution networks. The oil age was limited by refining capacity and energy density. The information age has been limited by Moore's Law and network effects.

The AI age will be limited by energy and cooling. This is becoming clear as the hyperscalers announce their spending plans. Microsoft's $80 billion isn't going primarily to GPUs. It's going to power infrastructure, cooling systems, and grid interconnects. The AI GPU chip market is forecast to grow by $145.1 billion at a 32.4% CAGR between 2024 and 2029, but the infrastructure to support those chips—particularly power and cooling—is equally critical.

This suggests where the option premium is flowing next. The current wave of infrastructure spending is discovering that AI at scale is fundamentally an energy problem. The next wave will be about solving that problem. New power sources, new cooling technologies, new chip designs optimized for efficiency rather than raw performance. We don't know which approaches will work, so we'll overfund them all and see what survives.

The case for doing nothing

After every bubble bursts, the calls for regulation intensify. We need better disclosure. Stricter limits on speculation. Protection for naive investors. Something to prevent it from happening again.

Cornell and Damodaran's policy prescription is radical in its simplicity: stop trying to make bubbles go away.

Their argument rests on a cost-benefit analysis. Yes, bubbles create volatility. Yes, investors lose money. Yes, employees lose jobs when companies fail. But the alternative is underinvestment in transformative opportunities.

Consider the counterfactual. Imagine we had somehow prevented the dot-com bubble. Regulators step in around 1998, cool the speculation, enforce strict valuation discipline. Far fewer internet companies get funded. The ones that do face higher bars for capital raising.

What would we have lost? Not just the failures, but the knowledge the failures generated. The infrastructure built by companies that no longer exist. The trained workforce that dispersed to other companies. The revealed preferences about what customers actually want rather than what surveys say they want.

Amazon's success looks inevitable in hindsight. But in 2000, the company's stock fell 90% and serious analysts questioned whether it would survive. The company was burning cash, competing against better-capitalized rivals, and operating in a market where dozens of competitors were discovering that the unit economics didn't work. Amazon survived in part because of what it learned from watching competitors fail.

Now imagine we decided to regulate the AI infrastructure build-out. Suppose we told the hyperscalers they couldn't spend $80 billion each on speculative capacity. Suppose we required neo-clouds to prove sustainable unit economics before raising capital. Suppose we blocked utilities from making thirty-year infrastructure bets on projected AI demand.

We would build less capacity. We would run fewer experiments. We would generate less knowledge about what works. And when the viable configurations of AI eventually became clear, we would lack the infrastructure to deploy them at scale.

The option premium would go unpaid. And unlike a financial option that simply expires worthless, an unpaid technology option premium has consequences. The country or region that pays the premium gains the infrastructure, the knowledge, and the network effects that come from being first. The ones that decline to pay it cede the advantage.

By 2019, Cornell and Damodaran's follow-up analysis of online advertising showed the market moderating. The number of public companies shrank. Market capitalizations adjusted toward reality. The imputed revenues for 2029, calculated the same way as their 2015 analysis, came out only slightly higher than the 2025 projections from four years earlier. This is what maturation looks like. The pricing game gives way to the value game. Growth metrics stop being enough. Companies need paths to profitability.

The installation phase was ending. The deployment phase was beginning. The option premium had been paid. Now it was time to see which options had value.

We're nowhere near that point with AI. The infrastructure build-out is accelerating, not decelerating. The capital commitments keep growing. The option premium is still being paid. As of November 2025, the scale continues to expand, with Anthropic's $50 billion commitment and OpenAI's Stargate project nearing its full $500 billion target.

Which means, if the pattern holds, we're still in the installation phase. The overbuilding will continue until it becomes obvious that capacity exceeds demand. Then the correction will come, the narrative will flip, and the market will shift from pricing to value. Some of the hyperscalers will have built too much. Some of the neo-clouds will fail. Some of the utility investments will prove unprofitable.

But the infrastructure will remain. The knowledge will persist. And the survivors will build the deployment phase on foundations that the failures created. This is how general-purpose technologies always work. The option premium gets paid during installation. The returns get realized during deployment.

The only question is whether you understand which phase you're in.