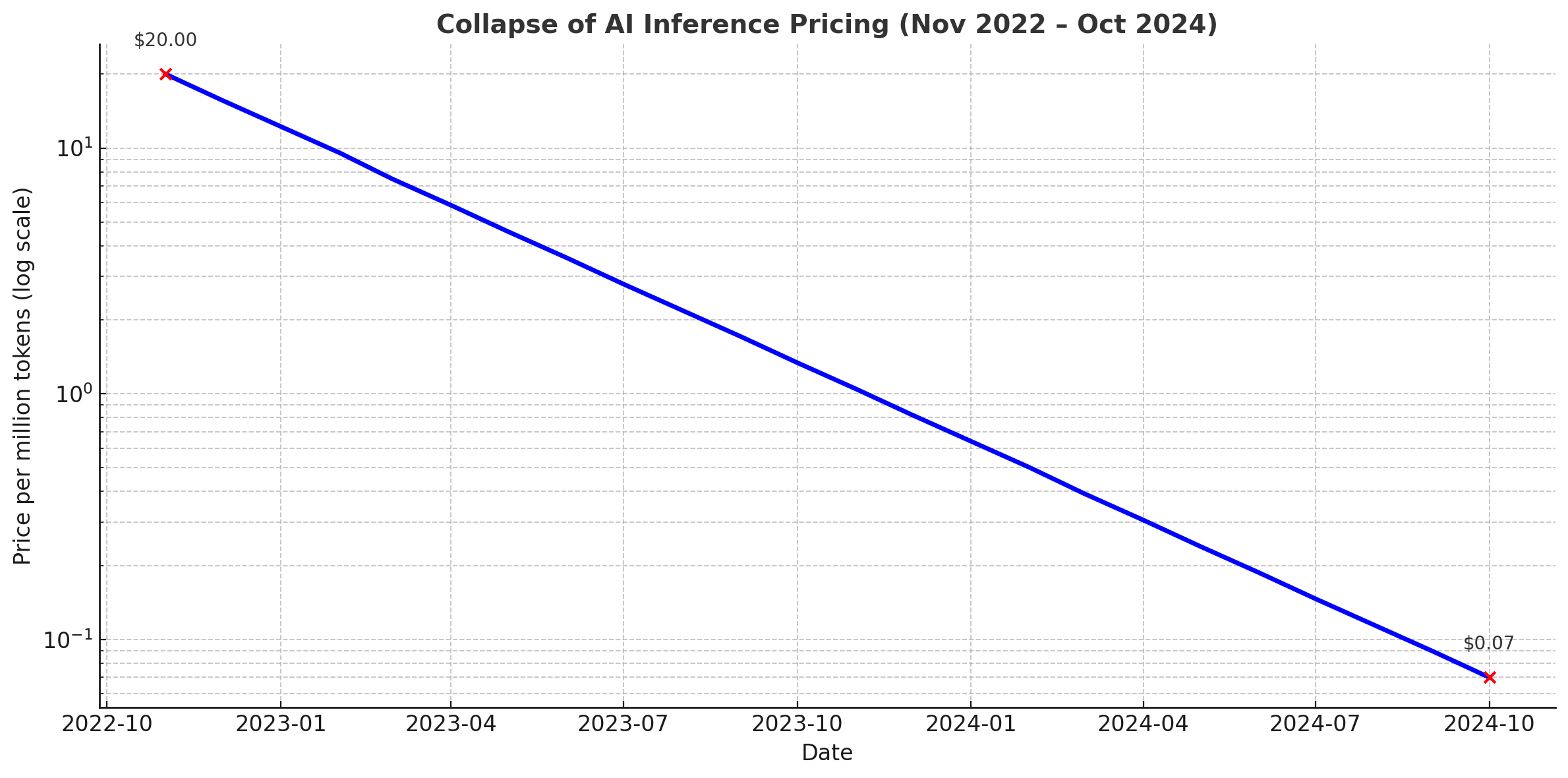

Artificial intelligence is becoming cheaper faster than anything in the history of modern technology. In just eighteen months, the cost of running a GPT-class model fell from twenty dollars per million tokens to seven cents. Storage took forty-five years to make a similar leap. Bandwidth took twenty-five. AI did it in less than two.

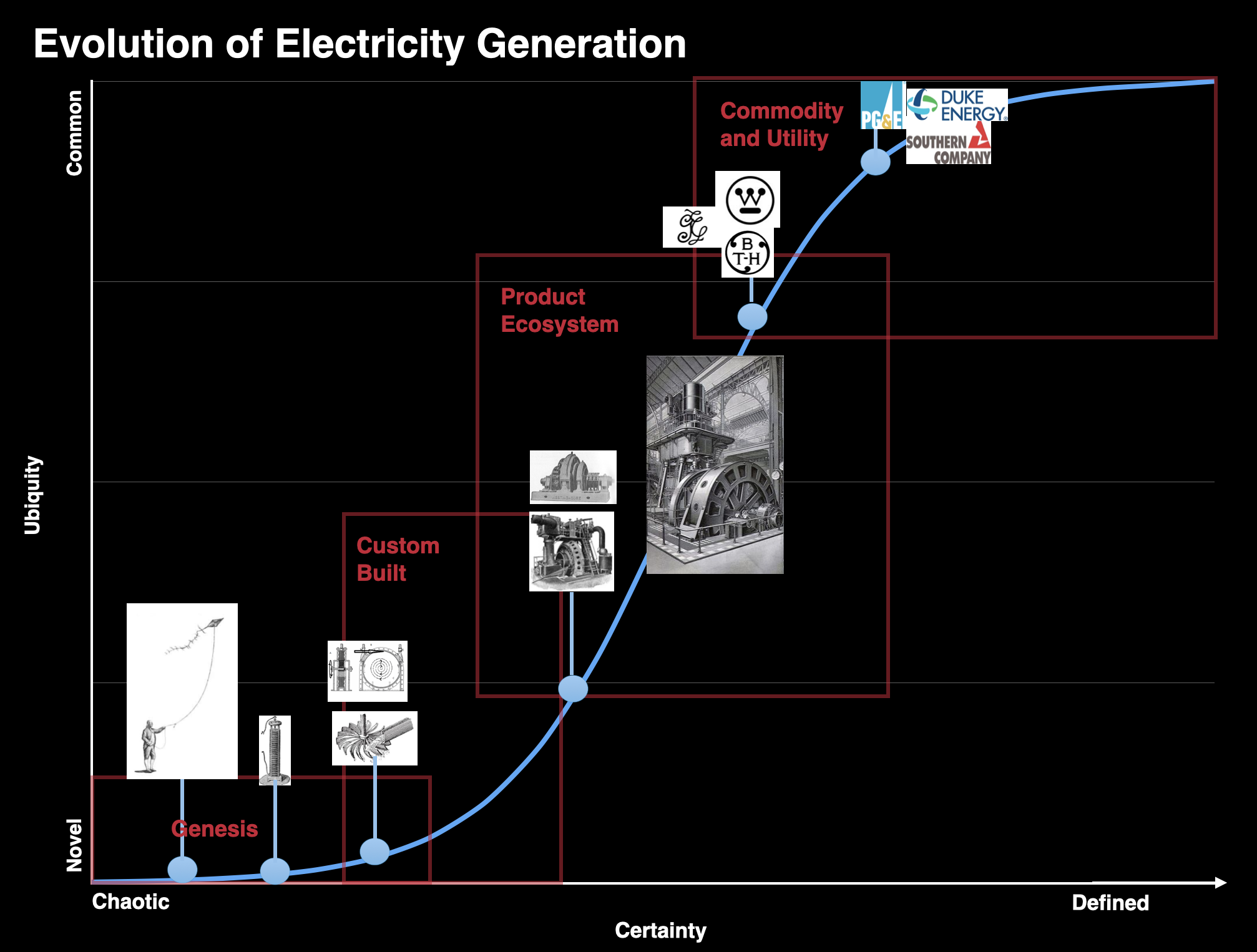

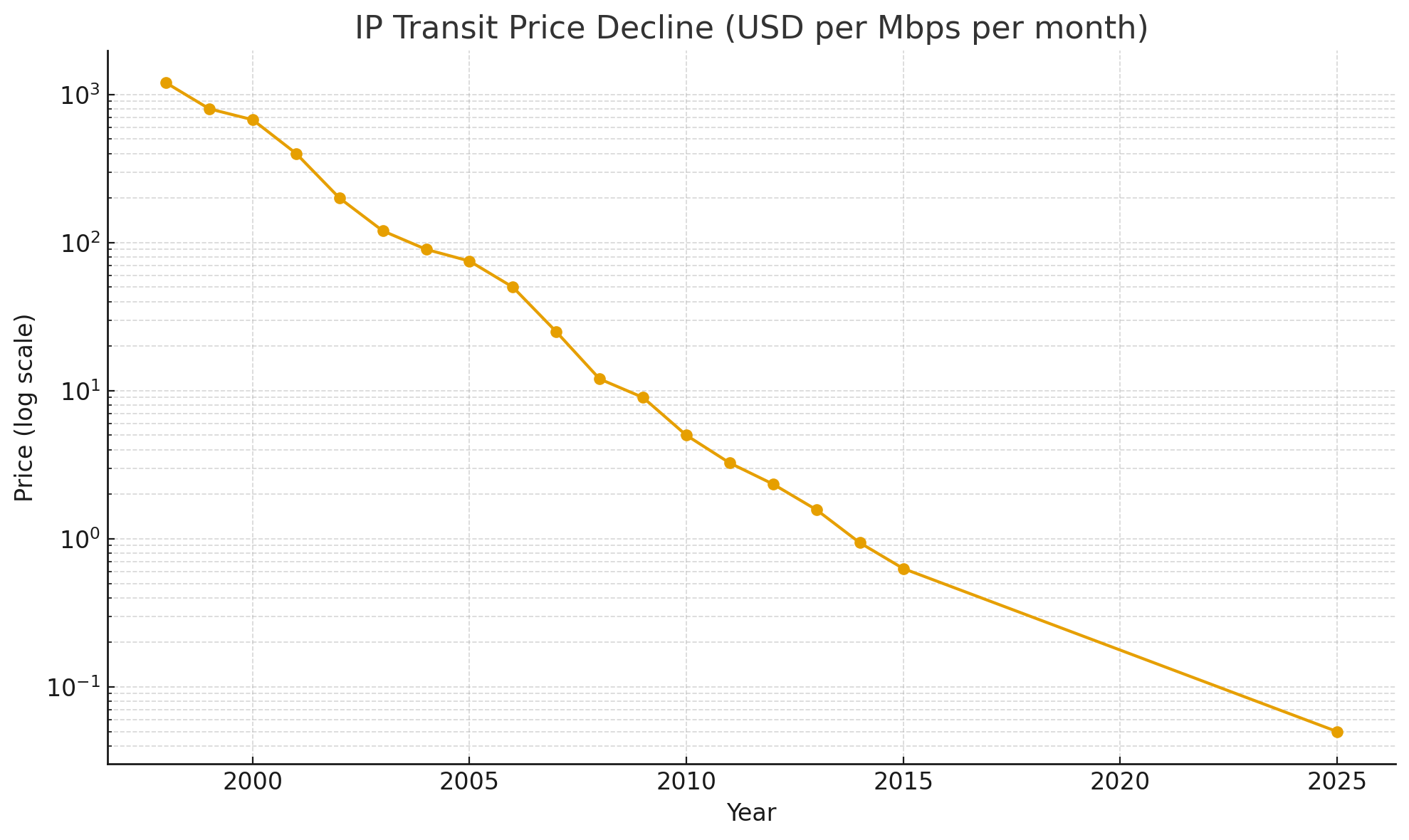

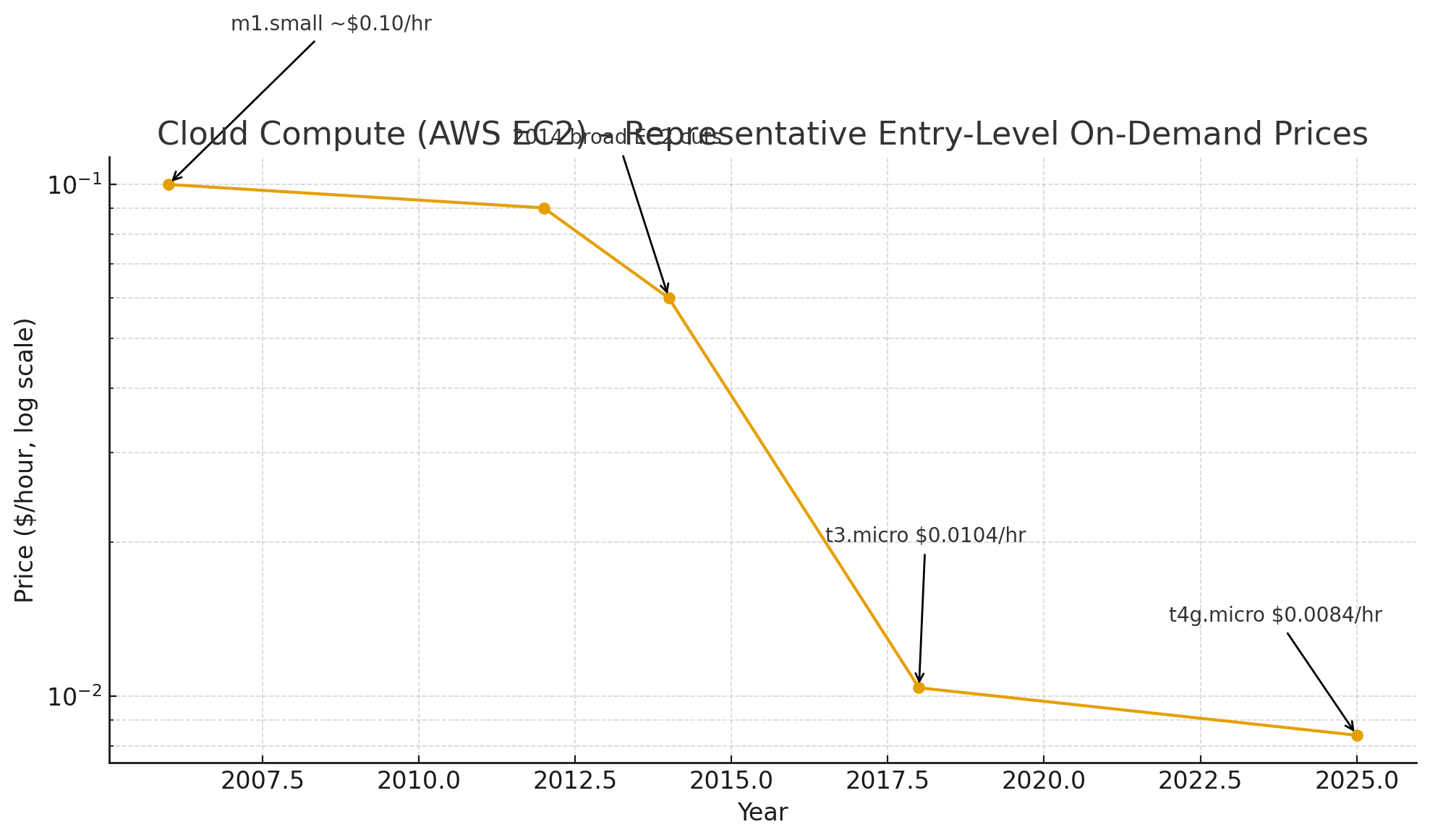

Every great technology ends in the same place. Railroads, telephones, electricity, airplanes, automobiles all began as revolutionary inventions, matured into commodity product ecosystems, and finally settled into utility models. In the march of technological progress, costs fall as adoption accelerates to ubiquity. This is the steady rhythm of Moore's Law, the slow glide of hard drive prices, the gradual thinning of bandwidth bills.

According to the AI Index Report 2025, the cost of inference has plunged from $20 per million tokens in late 2022 to $0.07 by the end of 2024. That is a more than 280-fold decline in just eighteen months, a cumulative price drop of about 99.7 percent. What we are witnessing with artificial intelligence is not gradual, and it is not steady. It looks more like a collapse.
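The arithmetic behind these figures is easy to check. The sketch below assumes the price fell at a constant exponential rate between the two quoted endpoints, and it treats the length of the window as a parameter because the per-year figure is sensitive to it. The function names are illustrative and do not come from the report.

```python
def fold_decline(start_price: float, end_price: float) -> float:
    """How many times cheaper the end price is than the start price."""
    return start_price / end_price


def cumulative_decline(start_price: float, end_price: float) -> float:
    """Total fractional price drop between the two endpoints."""
    return 1.0 - end_price / start_price


def annualized_decline(start_price: float, end_price: float, months: float) -> float:
    """Fractional price drop per year, assuming a constant exponential rate."""
    yearly_ratio = (end_price / start_price) ** (12.0 / months)
    return 1.0 - yearly_ratio


# Endpoints quoted in the text: dollars per million tokens.
START_PRICE = 20.00
END_PRICE = 0.07

print(f"fold decline:       {fold_decline(START_PRICE, END_PRICE):.0f}x")
print(f"cumulative decline: {cumulative_decline(START_PRICE, END_PRICE):.2%}")

# The window length is an assumption; two plausible readings are compared.
for window_months in (18, 24):
    rate = annualized_decline(START_PRICE, END_PRICE, window_months)
    print(f"annualized over {window_months} months: {rate:.1%}")
```

Under these assumptions the cumulative drop is fixed by the two prices alone, while the per-year rate depends on how many months the decline is spread over.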

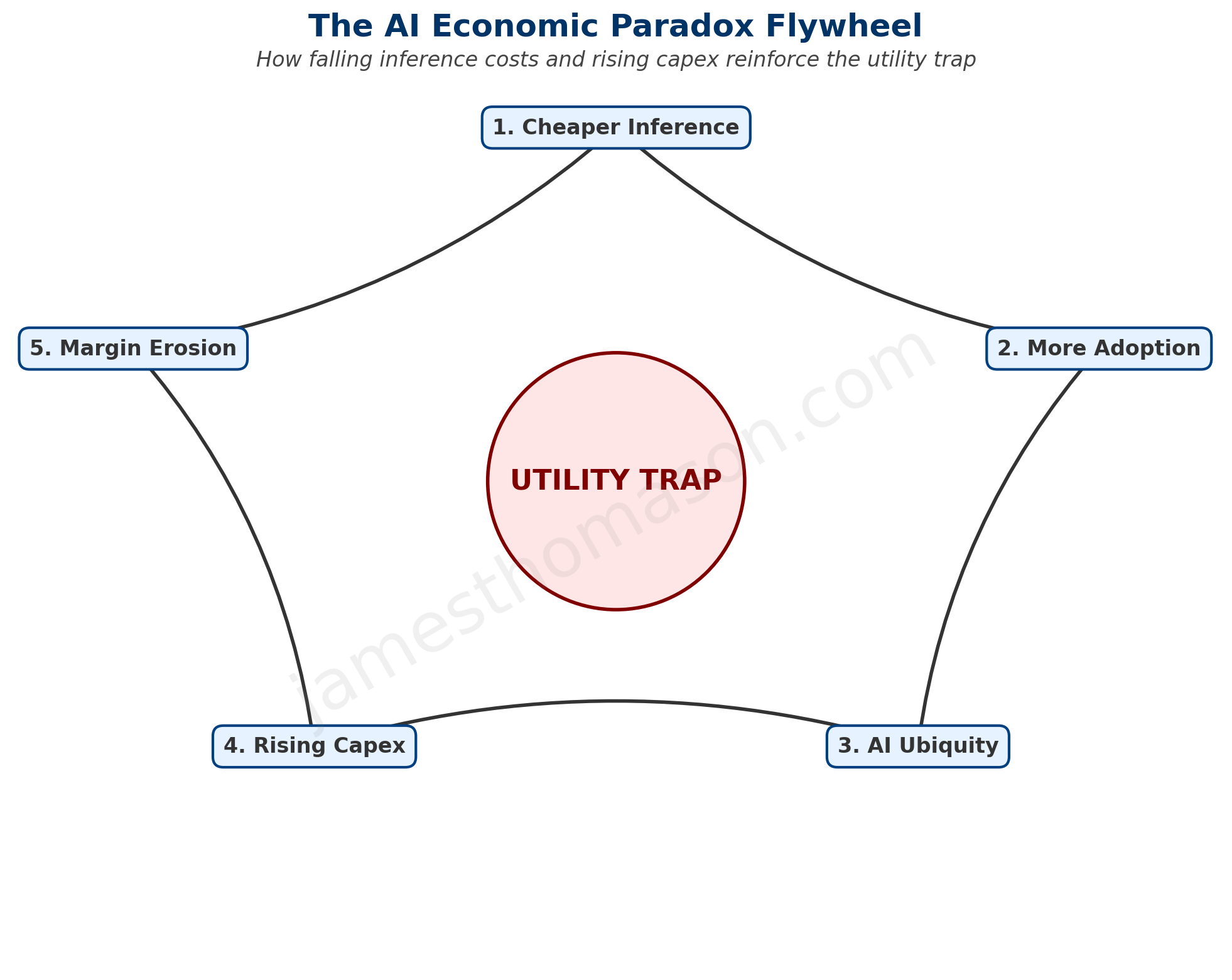

At first glance, this looks like a triumph of abundance. And on the demand side, it is. As prices collapse, AI flows into the fabric of computing. SaaS vendors embed autocomplete and summarization features. Productivity suites bundle copilots. Startups wire models into every workflow. AI becomes not a product but a layer, as ordinary and invisible as the TCP/IP protocol or the APIs that stitch applications together. Ubiquity, once the dream, is now reality.

But on the supply side, the picture is darker. Training the models that make this ubiquity possible is growing more expensive at breakneck speed. The AI Index Report notes that the original Transformer cost less than $1,000 to train in 2017. By 2023, GPT-4 was estimated at $79 million. In 2024, Meta's Llama 3.1-405B reached $170 million. Compute requirements for frontier models are doubling roughly every five months. The energy footprint rises with them and is now measured in gigawatts.
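To put these growth rates on an annual footing, a doubling period can be converted into a yearly multiple, and the two training-cost endpoints can be turned into an implied average growth rate. This is a rough sketch that assumes smooth exponential growth. It uses $1,000 as the 2017 baseline even though the text says the real figure was lower, so the implied growth is, if anything, understated.

```python
def annual_multiple_from_doubling(doubling_months: float) -> float:
    """Yearly growth multiple implied by a fixed doubling period."""
    return 2.0 ** (12.0 / doubling_months)


def implied_annual_multiple(start_value: float, end_value: float, years: float) -> float:
    """Average yearly growth multiple between two endpoints."""
    return (end_value / start_value) ** (1.0 / years)


# Compute doubling roughly every five months, as quoted in the text.
compute_multiple = annual_multiple_from_doubling(5)
print(f"compute grows about {compute_multiple:.1f}x per year")

# Training cost endpoints from the text; the 2017 value is an upper bound.
cost_multiple = implied_annual_multiple(1_000, 170_000_000, 2024 - 2017)
print(f"training cost grows at least {cost_multiple:.1f}x per year")
```

Both calculations land at roughly a fivefold increase per year, which is why the supply-side curve looks so different from the price curve on the demand side.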

This cost escalation has already reshaped the research landscape. In 2024, industry produced 55 notable models, while academia produced zero. Universities are priced out. What once was a field of experimentation has narrowed into a field of theory. Scholars can test ideas, but they cannot afford to build state-of-the-art models. The frontier is now corporate terrain.

Here lies the paradox. The cost to create soars even as the cost to use collapses. It is a pincer movement that squeezes even the strongest. The economics of capital investment rely on amortization. Pour billions into hardware, then earn it back slowly through usage fees. But when the unit price of usage vanishes before the capital is depreciated, the math fails. A billion-dollar training run cannot pay for itself when inference has become, for all practical purposes, free.
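The amortization argument can be made concrete with a toy model. The sketch below is purely illustrative: the capital outlay, starting price, usage volume, and monthly ratios are hypothetical numbers chosen to show the mechanism, not estimates for any real company. It accumulates monthly revenue (price times volume) and reports the month in which the outlay is recovered, if that ever happens.

```python
from typing import Optional


def payback_month(
    capex: float,
    price: float,
    volume: float,
    monthly_price_ratio: float,
    monthly_volume_ratio: float,
    horizon_months: int,
) -> Optional[int]:
    """Return the first month in which cumulative revenue covers capex.

    Price and volume are each multiplied by their monthly ratio after every
    month. Returns None if capex is not recovered within the horizon.
    """
    recovered = 0.0
    for month in range(1, horizon_months + 1):
        recovered += price * volume
        if recovered >= capex:
            return month
        price *= monthly_price_ratio
        volume *= monthly_volume_ratio
    return None


# Hypothetical units: capex of 100, first-month revenue of 10.
stable = payback_month(100, 1.0, 10, 1.0, 1.0, 600)

# Price falling about 27% a month (roughly a 280-fold drop over 18 months),
# with usage growing 20% a month. Revenue still shrinks every month.
collapsing = payback_month(100, 1.0, 10, 0.73, 1.2, 600)

# Same price collapse, but usage growing 40% a month outruns it.
outrunning = payback_month(100, 1.0, 10, 0.73, 1.4, 600)

print("stable price:      ", stable)
print("collapsing price:  ", collapsing)
print("volume outruns it: ", outrunning)
```

In this toy setup, whether the investment is ever recovered depends on the product of the two monthly ratios. When price falls faster than volume grows, monthly revenue shrinks geometrically and total revenue approaches a ceiling that may sit below the original outlay.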

This is the utility trap. We saw this pattern play out brutally during the dot-com boom. Telecom companies poured hundreds of billions into fiber optic networks, convinced that bandwidth demand would justify any investment. When the bubble burst, dark fiber lay unused across continents while carriers filed for bankruptcy. Data centers suffered the same fate: vast server farms built for a future that arrived too slowly, leaving investors holding depreciating hardware and empty racks.

In the cloud era, hyperscalers partially avoided this trap. When servers, networks, and storage became commoditized, Amazon, Microsoft, and Google didn't surrender to utility margins. Instead, they climbed the value stack. Amazon and Microsoft didn't merely rent servers. They built a legion of higher-margin services on top. Microsoft turned infrastructure into Office subscriptions. Google monetized search and advertising. They used commodity infrastructure as a loss leader to capture customers in profitable ecosystems above it. Even as storage and compute margins compressed, total revenue per customer expanded. The utility trap was real, but escapable.

AI deflation should favor the hyperscalers. Unlike previous infrastructure waves, AI capabilities are so powerful and versatile that they can be bundled into virtually any service. When AI becomes nearly free, it should enable hyperscalers to add intelligence to every product they offer, creating even more value-added services and stronger ecosystem lock-in. The strategy that saved them before should work even better now, at least in theory.

The hyperscalers are executing the tried-and-true strategy. Microsoft and Google are embedding AI into existing suites, hoping to capture value through workflow lock-in rather than per-token charges. API access, now the most common release model, is less a profit center than a hook to pull customers deeper into cloud ecosystems. Proprietary data, whether regulated, domain-specific, or otherwise scarce, is being hoarded as the next moat. Energy reasserts itself as the hard floor. GPUs demand electricity, and electricity cannot be deflated below zero.

But speed changes everything. The hyperscalers are caught in a race between how quickly they can integrate AI into profitable services and how quickly AI capabilities become free for everyone. If raw AI capabilities become universally accessible before the hyperscalers can lock customers into their AI-powered ecosystems, then the bundling strategy fails. The trap is temporal, not structural.

Today, hyperscalers once again risk becoming tomorrow's electric companies or telcos. Indispensable infrastructure providers, but condemned to commodity margins. Every turn of the flywheel reinforces the trap. Cheaper inference drives adoption. Adoption produces ubiquity. Ubiquity demands more capital spending. Rising capex erodes margins. The cycle pushes inference costs lower still. Round and round it spins, with no exit. Capex budgets swell to keep ahead of rivals, even as the revenue pool beneath them contracts.

Yet even as hyperscalers sink capital into ever-larger data centers, the pendulum is swinging back toward software efficiency. Throughout computing history, whenever hardware becomes prohibitively expensive, innovation shifts to algorithmic optimization. In the 1980s, when memory cost thousands per megabyte, programmers wrote incredibly lean code. When network bandwidth was scarce in the dial-up era, engineers developed compression algorithms. When mobile processors were limited, developers created energy-efficient architectures that squeezed maximum performance from minimal silicon.

Chinese labs are following this script. DeepSeek reportedly achieves comparable performance while consuming only 5-12% of the energy and running on commodity hardware instead of expensive H100s. If the efficiency curve steepens faster than the deployment curve, then data centers full of GPUs become stranded assets. In other words, efficiency gains threaten to spring the utility trap by making the hyperscalers' expensive infrastructure unnecessary before it can be amortized.

The question lingers. If inference becomes free, who will fund the next $170 million training run? Who sustains the research when the product itself has become a commodity utility? The specter is a deflationary spiral. Yesterday's breakthroughs become universal, but tomorrow's breakthroughs go unfunded.

The paradox of AI is not that it fails. It is that it succeeds too well. Its abundance undermines its economics. Its ubiquity erodes its value. The utility trap yawns open, and the giants of technology lumber toward it willingly, their arms full of GPUs, their pockets lightened by their own momentum.